UKPGAN: A General Self-Supervised Keypoint Detector

Yang You, Wenhai Liu, Yanjie Ze, Yong-Lu Li, Weiming Wang, Cewu Lu

CVPR 2022

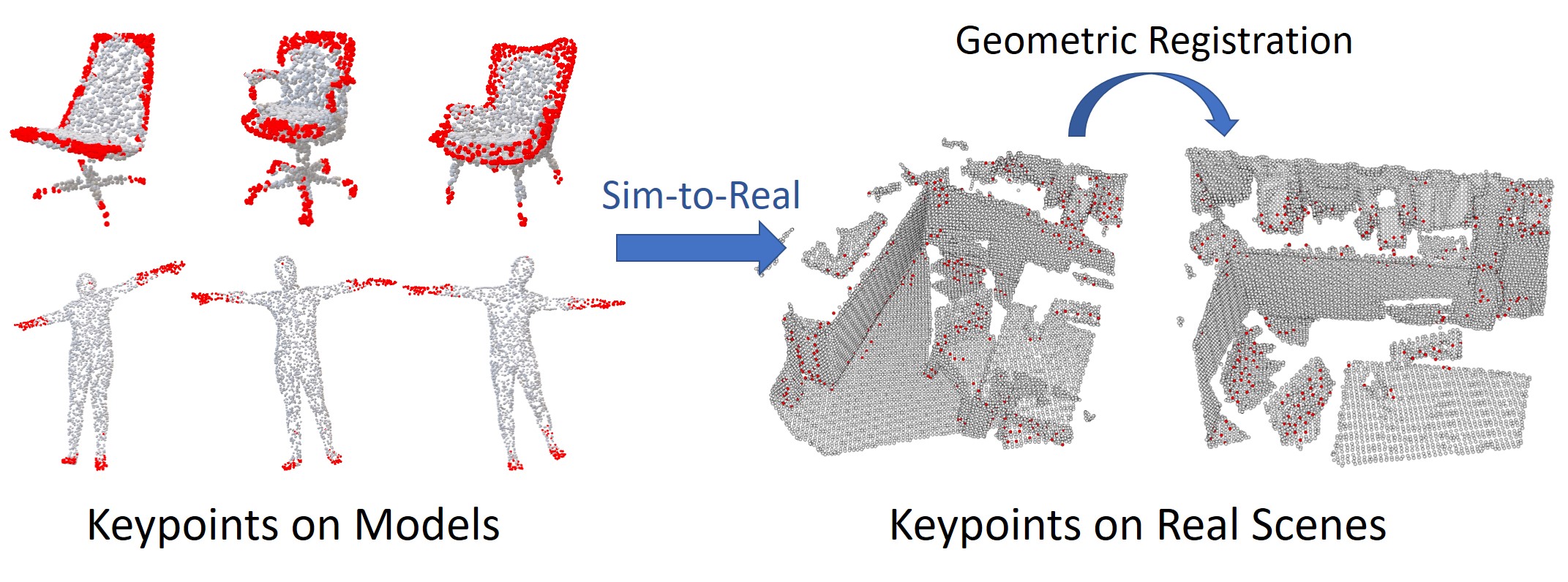

UKPGAN is a self-supervised 3D keypoint detector that works on rigid objects, non-rigid objects, and real scenes. Note that our keypoint detector depends solely on local features and is invariant to both translation and rotation.

Abstract

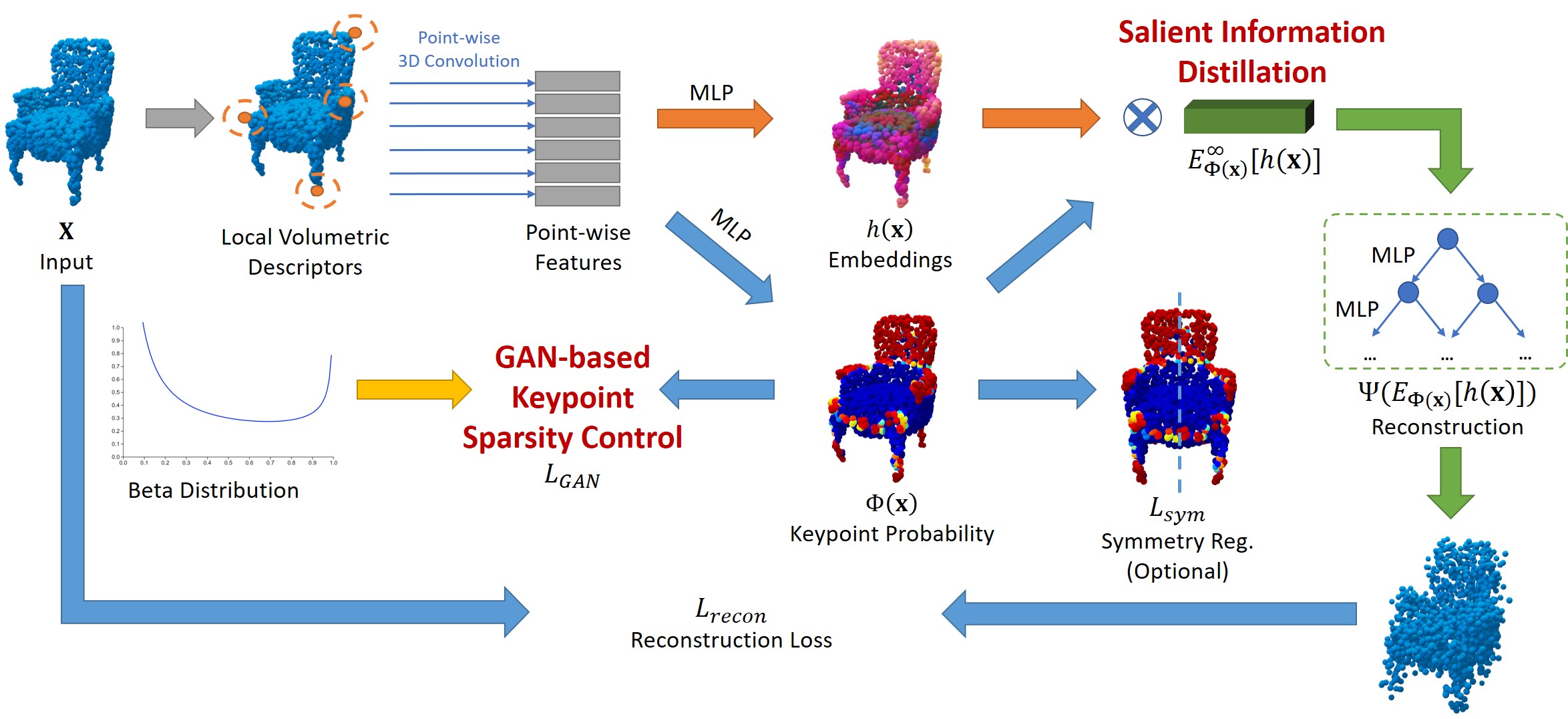

Keypoint detection is an essential component of object registration and alignment. In this work, we treat keypoint detection as information compression and force the model to filter out the irrelevant points of an object. Based on this, we propose UKPGAN, a general self-supervised 3D keypoint detector in which keypoints are detected so that they can reconstruct the original object shape. Two modules, GAN-based keypoint sparsity control and salient information distillation, are proposed to locate these important keypoints. Extensive experiments show that our keypoints align well with human-annotated keypoint labels and can be applied to SMPL human bodies under various non-rigid deformations. Furthermore, our keypoint detector, trained on clean object collections, generalizes well to real-world scenarios and thus further improves geometric registration when combined with off-the-shelf point descriptors. Repeatability experiments show that our model is stable under both rigid and non-rigid transformations, with local reference frame estimation.

Method Overview

Qualitative Results
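The results in this section are grouped as "With NMS" and "Without NMS (p > 0.5)", where p is the per-point keypoint probability predicted by the detector. The snippet below is a minimal sketch of how these two selection modes could be computed from a point cloud and its probabilities. It is not the released UKPGAN code: the function names, the greedy radius-based NMS procedure, the `radius` value, and the `min_prob` cutoff used inside NMS are all assumptions made for illustration.

```python
import numpy as np


def select_by_threshold(prob, threshold=0.5):
    """Keep every point whose keypoint probability exceeds `threshold`."""
    return np.flatnonzero(prob > threshold)


def select_by_nms(points, prob, radius, min_prob=0.5):
    """Greedy non-maximum suppression (hypothetical variant).

    Candidates above `min_prob` are visited in descending probability;
    a candidate is kept only if no already-kept point lies within `radius`.
    """
    candidates = np.flatnonzero(prob > min_prob)
    order = candidates[np.argsort(-prob[candidates], kind="stable")]
    kept = []
    for idx in order:
        if all(np.linalg.norm(points[idx] - points[k]) > radius for k in kept):
            kept.append(int(idx))
    return np.array(kept, dtype=int)


if __name__ == "__main__":
    # Toy point cloud: two tight clusters plus one low-probability point.
    points = np.array(
        [[0.0, 0.0, 0.0], [0.05, 0.0, 0.0], [1.0, 0.0, 0.0],
         [1.02, 0.0, 0.0], [2.0, 0.0, 0.0]]
    )
    prob = np.array([0.9, 0.8, 0.6, 0.7, 0.2])

    print("threshold only:", select_by_threshold(prob))
    print("with NMS:      ", select_by_nms(points, prob, radius=0.1))
```

Under these assumptions, thresholding alone tends to return dense clusters of high-probability points around each salient region, while NMS leaves a single representative per neighborhood, which is the visual difference one would expect between the two groups of results.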

Real-world Scenes

SMPL Models

With NMS

Without NMS (p > 0.5)

ShapeNet Models

With NMS

- Chairs

- Tables

- Airplanes

Without NMS (p > 0.5)

- Chairs

- Tables

- Airplanes

Citation

@inproceedings{you2022ukpgan,
  title={UKPGAN: A General Self-Supervised Keypoint Detector},
  author={You, Yang and Liu, Wenhai and Ze, Yanjie and Li, Yong-Lu and Wang, Weiming and Lu, Cewu},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2022}
}