Abstract

Object pose estimation is a critical area of 3D vision. State-of-the-art methods that rely on real-world pose annotations perform well, but collecting such real training data is costly. This paper focuses on a setting in which only 3D CAD models are available as prior knowledge, with no background or clutter information. We introduce CPPF++, a novel method for sim-to-real category-level pose estimation. It builds on the point-pair voting scheme of CPPF and reformulates it from a probabilistic view. To address vote collision, we model the voting uncertainty by estimating the probability distribution of each point pair in the canonical space. We further augment the contextual information carried by each voting unit by introducing $N$-point tuples. To improve robustness and accuracy, we incorporate several modules, including noisy pair filtering, online alignment optimization, and a tuple feature ensemble. Alongside these methodological advances, we introduce a new category-level pose estimation dataset, DiversePose 300. Experiments show that our method significantly surpasses previous sim-to-real approaches and achieves comparable or superior performance on novel datasets.

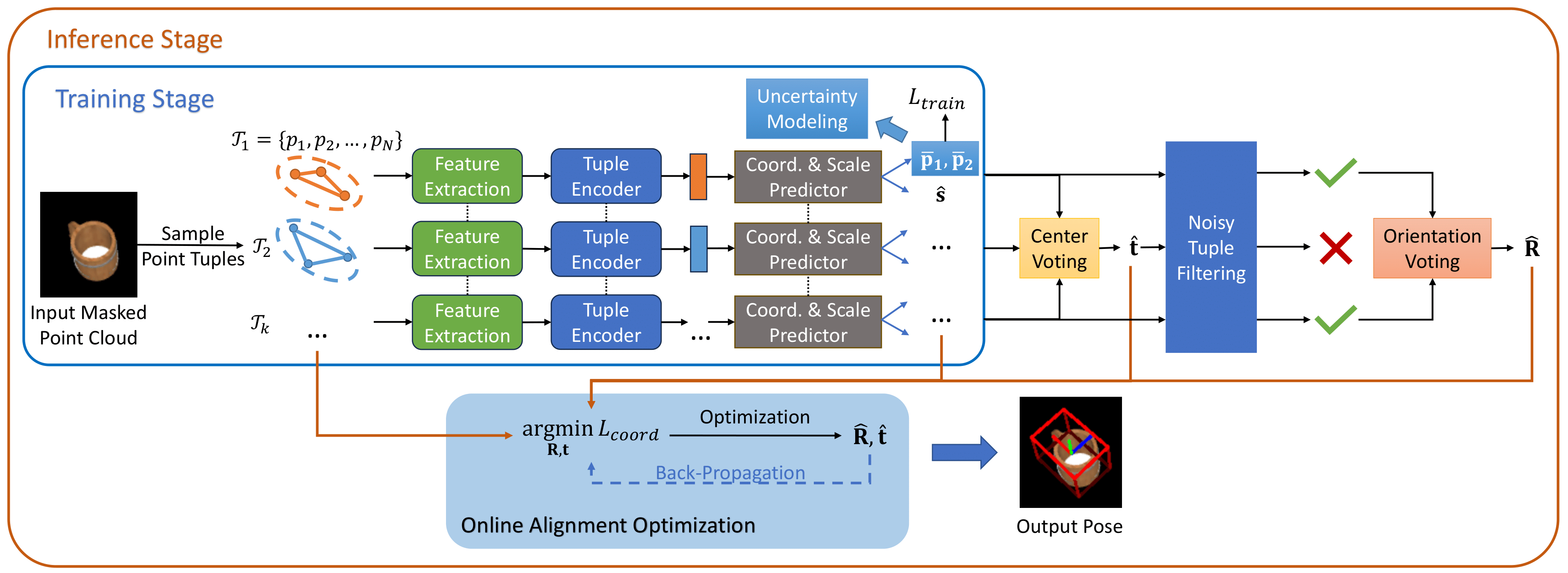

Our pipeline starts from a masked point cloud produced by an off-the-shelf instance segmentation model. Point tuples are then randomly sampled from the object. Features are extracted for each tuple and fed into a tuple encoder to obtain the tuple embedding. The canonical coordinate and scale of each tuple are then predicted. During inference, the predicted canonical coordinates and scales are used to vote for the object's center. To mitigate the influence of erroneous tuple samples, a noisy pair filtering module ensures that orientation votes are cast only by reliable point tuples. Finally, an online alignment optimization step further refines the predicted rotation and translation.
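The center-voting step can be illustrated with a small geometric sketch. It is not the CPPF++ implementation: it assumes that a per-point canonical coordinate and a single object scale are already available (here they are plain arrays rather than network outputs), and it uses point pairs rather than general $N$-point tuples. Under those assumptions, each point lies at distance `scale * ||canonical coordinate||` from the object center, so a pair of points constrains the center to the circle where two spheres intersect. Sampling that circle and accumulating votes in a voxel grid gives a center estimate. The function names, the voxel size, and the number of sampled angles are all hypothetical choices.

```python
import numpy as np


def circle_candidates(p1, p2, r1, r2, num_angles=72):
    """Sample candidate centers on the intersection circle of two spheres.

    Sphere k is centered at observed point p_k with radius r_k, the
    (assumed) predicted distance from that point to the object center.
    """
    d_vec = p2 - p1
    d = np.linalg.norm(d_vec)
    u = d_vec / d                                    # unit axis through both points
    a = (d * d + r1 * r1 - r2 * r2) / (2.0 * d)      # offset of circle plane from p1
    h = np.sqrt(max(r1 * r1 - a * a, 0.0))           # circle radius, clamped if noisy
    m = p1 + a * u                                   # circle center
    # Build an orthonormal basis (v, w) of the plane perpendicular to u.
    helper = np.array([1.0, 0.0, 0.0]) if abs(u[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    v = np.cross(u, helper)
    v /= np.linalg.norm(v)
    w = np.cross(u, v)
    theta = np.linspace(0.0, 2.0 * np.pi, num_angles, endpoint=False)
    return m + h * (np.cos(theta)[:, None] * v + np.sin(theta)[:, None] * w)


def vote_center(points, canon, scale, num_pairs=1000, voxel=0.02, seed=0):
    """Estimate the object center by voting with randomly sampled point pairs.

    points: (N, 3) observed points in the camera frame.
    canon:  (N, 3) assumed per-point canonical coordinates (center at origin).
    scale:  assumed scalar object scale.
    """
    rng = np.random.default_rng(seed)
    radii = scale * np.linalg.norm(canon, axis=1)
    i_idx = rng.integers(0, len(points), size=num_pairs)
    j_idx = rng.integers(0, len(points), size=num_pairs)
    keep = i_idx != j_idx                            # drop degenerate pairs
    cands = np.concatenate([
        circle_candidates(points[i], points[j], radii[i], radii[j])
        for i, j in zip(i_idx[keep], j_idx[keep])
    ])
    # Accumulate votes in a sparse voxel grid and return the fullest voxel's center.
    vox = np.floor(cands / voxel).astype(np.int64)
    uniq, counts = np.unique(vox, axis=0, return_counts=True)
    best = uniq[counts.argmax()]
    return (best + 0.5) * voxel
```

With noise-free inputs, every circle passes through the true center, so votes pile up in its voxel while the rest of each circle spreads thinly over space. In practice the predictions are noisy, which is where the filtering and refinement modules described above would come in.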

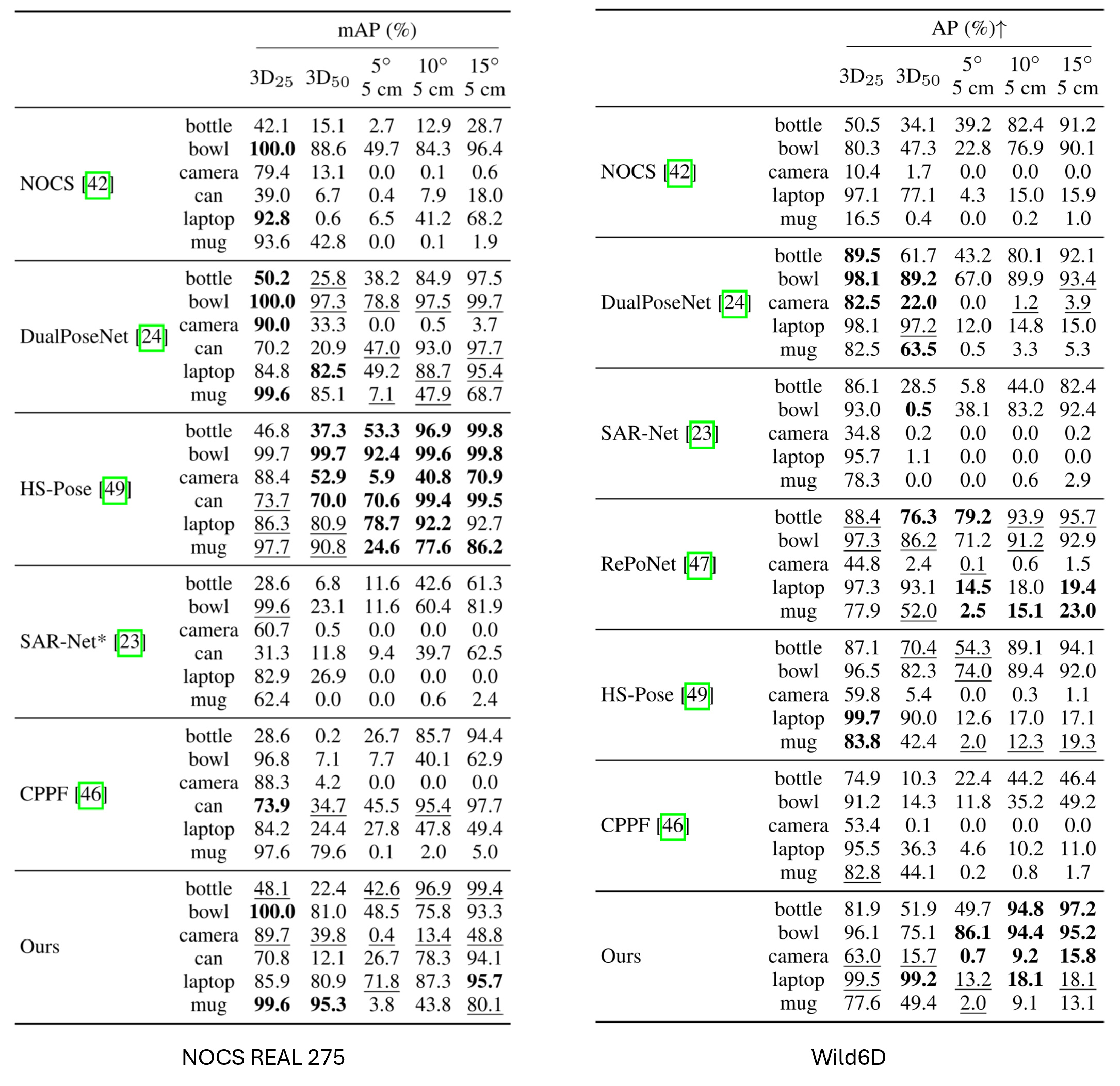

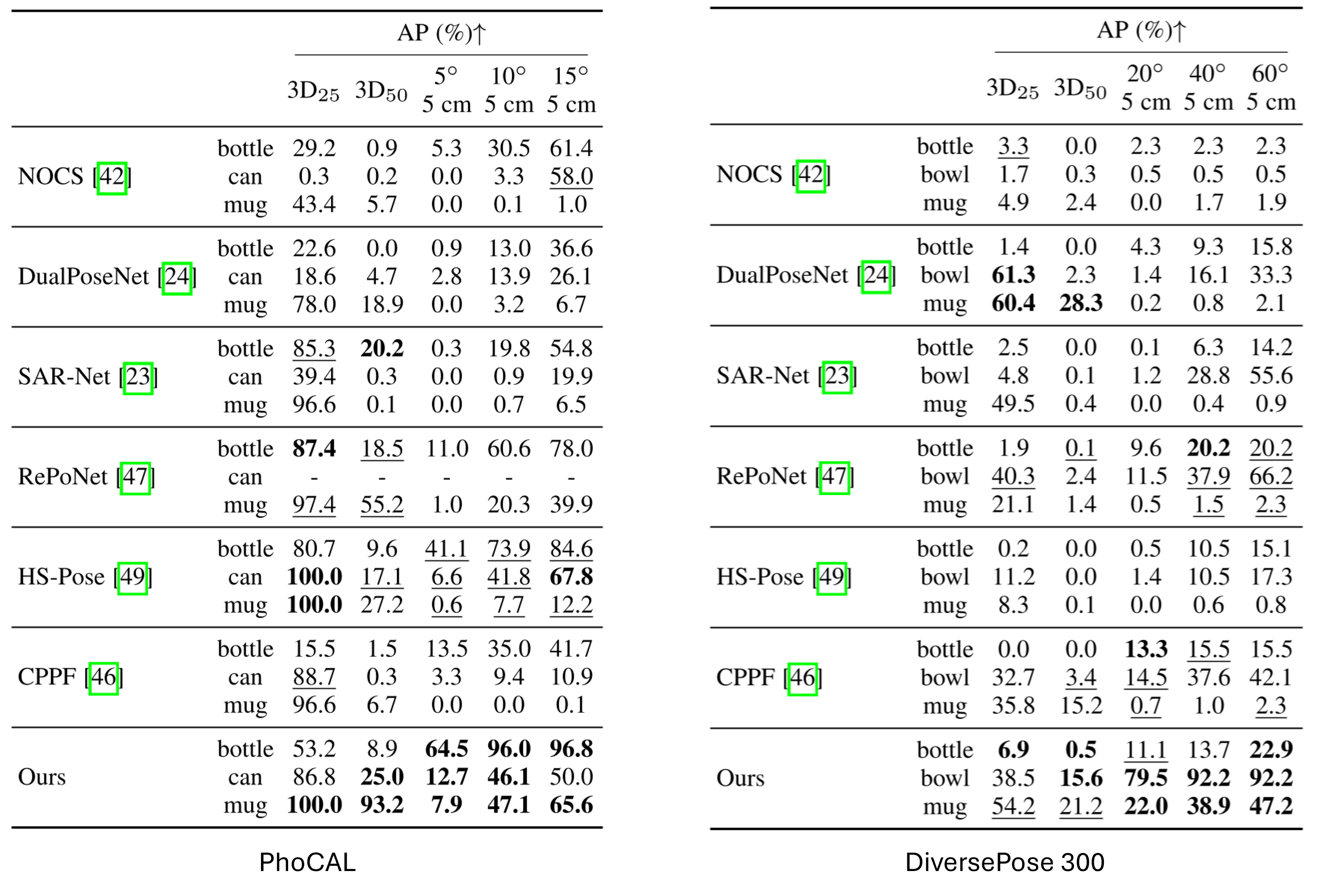

SotA Performance on Multiple Datasets

(best in bold, second-best underlined)